This repository contains the Dirty-MNIST dataset described in the paper "Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic and Aleatoric Uncertainty".

The official repository for the paper is at https://github.com/omegafragger/DDU.

If the code or the paper has been useful in your research, please add a citation to our work:

@article{mukhoti2021deterministic,

title={Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic and Aleatoric Uncertainty},

author={Mukhoti, Jishnu and Kirsch, Andreas and van Amersfoort, Joost and Torr, Philip HS and Gal, Yarin},

journal={arXiv preprint arXiv:2102.11582},

year={2021}

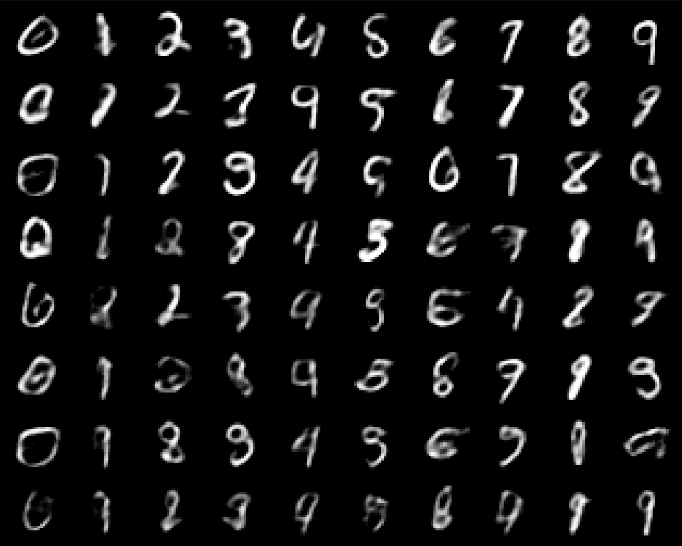

}DirtyMNIST is a concatenation of MNIST and AmbiguousMNIST, with 60k sample-label pairs each in the training set. AmbiguousMNIST contains generated ambiguous MNIST samples with varying entropies: 6k unique samples with 10 labels each.
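The index layout this implies can be sketched with plain integer arithmetic. This is a hypothetical illustration, not part of the ddu_dirty_mnist API: it assumes that MNIST occupies the first 60k training indices and AmbiguousMNIST the next 60k, and that the 10 labels of each unique ambiguous image sit in consecutive positions (which is what the advice to split the ambiguous half at multiples of 10 suggests). The constants and the describe_index helper are names chosen only for this sketch.

```python
# Hypothetical index arithmetic for the DirtyMNIST training set. Assumes:
# (1) MNIST occupies indices [0, 60k) and AmbiguousMNIST [60k, 120k);
# (2) the 10 labels of each unique ambiguous image are stored contiguously.
NUM_MNIST_TRAIN = 60_000
NUM_AMBIGUOUS_UNIQUE = 6_000
LABELS_PER_AMBIGUOUS = 10
NUM_AMBIGUOUS_FLAT = NUM_AMBIGUOUS_UNIQUE * LABELS_PER_AMBIGUOUS  # 60_000 pairs


def describe_index(idx: int) -> tuple:
    """Map a flat DirtyMNIST training index to its (assumed) origin."""
    total = NUM_MNIST_TRAIN + NUM_AMBIGUOUS_FLAT
    if not 0 <= idx < total:
        raise IndexError(f"index {idx} out of range for {total} samples")
    if idx < NUM_MNIST_TRAIN:
        return ("mnist", idx)
    offset = idx - NUM_MNIST_TRAIN
    # Quotient = which unique ambiguous image; remainder = which of its 10 labels.
    unique_id, label_slot = divmod(offset, LABELS_PER_AMBIGUOUS)
    return ("ambiguous", unique_id, label_slot)


print(describe_index(59_999))
print(describe_index(60_000))
print(describe_index(119_999))
```

Under these assumptions, indices 60,000 to 60,009 all refer to the same ambiguous image, just with different labels.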

pip install ddu_dirty_mnist

After installing, you get a Dirty-MNIST train or test set just like you would for MNIST in PyTorch.

import ddu_dirty_mnist

# Download the data into the current directory (if not already present) and load both splits.
dirty_mnist_train = ddu_dirty_mnist.DirtyMNIST(".", train=True, download=True, device="cuda")
dirty_mnist_test = ddu_dirty_mnist.DirtyMNIST(".", train=False, download=True, device="cuda")
len(dirty_mnist_train), len(dirty_mnist_test)

Create torch.utils.data.DataLoaders with num_workers=0 and pin_memory=False for maximum throughput; see the PyTorch DataLoader documentation for details.

import torch

dirty_mnist_train_dataloader = torch.utils.data.DataLoader(
    dirty_mnist_train,
    batch_size=128,
    shuffle=True,
    num_workers=0,
    pin_memory=False,
)
dirty_mnist_test_dataloader = torch.utils.data.DataLoader(
    dirty_mnist_test,
    batch_size=128,
    shuffle=False,
    num_workers=0,
    pin_memory=False,
)

AmbiguousMNIST can also be used on its own:

import ddu_dirty_mnist

ambiguous_mnist_train = ddu_dirty_mnist.AmbiguousMNIST(".", train=True, download=True, device="cuda")
ambiguous_mnist_test = ddu_dirty_mnist.AmbiguousMNIST(".", train=False, download=True, device="cuda")
ambiguous_mnist_train, ambiguous_mnist_test

Again, create torch.utils.data.DataLoaders with num_workers=0 and pin_memory=False for maximum throughput; see the PyTorch DataLoader documentation for details.

import torch

ambiguous_mnist_train_dataloader = torch.utils.data.DataLoader(
    ambiguous_mnist_train,
    batch_size=128,
    shuffle=True,
    num_workers=0,
    pin_memory=False,
)
ambiguous_mnist_test_dataloader = torch.utils.data.DataLoader(
    ambiguous_mnist_test,
    batch_size=128,
    shuffle=False,
    num_workers=0,
    pin_memory=False,
)
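Because every unique AmbiguousMNIST image carries 10 labels, the spread of those labels gives a rough per-image measure of ambiguity. The sketch below is a hypothetical helper, not part of the package: it assumes the flattened labels have already been collected into a Python list in dataset order (for example with [int(y) for _, y in ambiguous_mnist_train], assuming the dataset yields (image, label) pairs) and that each image's 10 labels are contiguous. It computes the empirical label entropy of each group in nats; it illustrates the idea of "varying entropies" and is not how the dataset itself was generated.

```python
import math
from collections import Counter

LABELS_PER_SAMPLE = 10  # each unique AmbiguousMNIST image carries 10 labels


def group_labels(flat_labels):
    """Split a flat label list into consecutive groups of 10 (assumed layout)."""
    if len(flat_labels) % LABELS_PER_SAMPLE != 0:
        raise ValueError("label count must be a multiple of 10")
    return [
        flat_labels[i : i + LABELS_PER_SAMPLE]
        for i in range(0, len(flat_labels), LABELS_PER_SAMPLE)
    ]


def empirical_entropy(labels):
    """Entropy (in nats) of the empirical label distribution of one group."""
    counts = Counter(labels)
    n = len(labels)
    # sum of p * log(1/p) over observed labels; 0.0 when all labels agree
    return sum((c / n) * math.log(n / c) for c in counts.values())


# Toy labels for two hypothetical images: one unambiguous, one split 5/5.
flat = [7] * 10 + [4] * 5 + [9] * 5
groups = group_labels(flat)
print([round(empirical_entropy(g), 4) for g in groups])
```

A group whose 10 labels all agree has entropy 0, while an even two-way split reaches log 2.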

Additional Guidance

- The current AmbiguousMNIST contains 6k unique samples with 10 labels each. This multi-label dataset is flattened to 60k sample-label pairs. The assumption is that ambiguous samples have multiple "valid" labels because they are ambiguous. Each MNIST sample appears only once, whereas each ambiguous sample appears 10 times, so MNIST samples are intentionally undersampled in comparison; this benefits active learning (AL) acquisition functions that can select unambiguous samples.

- Pick your initial training samples (for warm-starting active learning) from the MNIST half of DirtyMNIST to avoid starting training with potentially very ambiguous samples, which might add a lot of variance to your experiments.

- Make sure to pick your validation set from the MNIST half as well, for the same reason as above.

- Your acquisition batch size should probably be at least 10, given that each sample in Ambiguous-MNIST has 10 labels.

- By default, Gaussian noise with a standard deviation of 0.05 is added to each sample to prevent acquisition functions from cheating by discarding "duplicates".

- If you want to split Ambiguous-MNIST into subsets (or Dirty-MNIST within its second, ambiguous half), make sure to split at multiples of 10 so that no flattened multi-label sample is divided across subsets.
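The index-selection advice in this list can be sketched in plain Python. This is a hypothetical helper, not part of ddu_dirty_mnist, and it relies on the same assumed layout as before: MNIST at training indices [0, 60k), AmbiguousMNIST at [60k, 120k), with each image's 10 labels stored consecutively. Warm-start and validation indices are drawn only from the MNIST half, and the ambiguous half is split by shuffling whole 10-label groups rather than individual indices.

```python
import random

NUM_MNIST_TRAIN = 60_000     # first half of DirtyMNIST (assumed layout)
NUM_AMBIGUOUS_FLAT = 60_000  # second half: 6k images x 10 labels
LABELS_PER_AMBIGUOUS = 10


def pick_mnist_indices(num_initial, num_val, seed=0):
    """Draw disjoint warm-start and validation indices from the MNIST half only."""
    rng = random.Random(seed)
    picked = rng.sample(range(NUM_MNIST_TRAIN), num_initial + num_val)
    return picked[:num_initial], picked[num_initial:]


def split_ambiguous_groups(fraction, seed=0):
    """Split the ambiguous half into two index lists without breaking any 10-label group."""
    num_groups = NUM_AMBIGUOUS_FLAT // LABELS_PER_AMBIGUOUS
    group_ids = list(range(num_groups))
    random.Random(seed).shuffle(group_ids)
    cut = int(fraction * num_groups)

    def expand(ids):
        # Group g covers flat indices [60k + 10g, 60k + 10g + 10): boundaries at multiples of 10.
        return [
            NUM_MNIST_TRAIN + g * LABELS_PER_AMBIGUOUS + k
            for g in ids
            for k in range(LABELS_PER_AMBIGUOUS)
        ]

    return expand(group_ids[:cut]), expand(group_ids[cut:])


initial, val = pick_mnist_indices(num_initial=20, num_val=1_000)
amb_a, amb_b = split_ambiguous_groups(fraction=0.5)
print(len(initial), len(val), len(amb_a), len(amb_b))
```

Under the same assumptions, such index lists could then be wrapped with torch.utils.data.Subset(dirty_mnist_train, indices). Sampling whole groups is what keeps all 10 labels of an ambiguous image on the same side of a split.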