import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.ensemble import RandomForestClassifier

# Generate synthetic data

np.random.seed(1337)

X, y = make_classification(n_samples=1000, n_features=20)

# Start with a small labeled set

labeled_idx = np.random.choice(len(X), 10, replace=False)

unlabeled_idx = np.setdiff1d(np.arange(len(X)), labeled_idx)

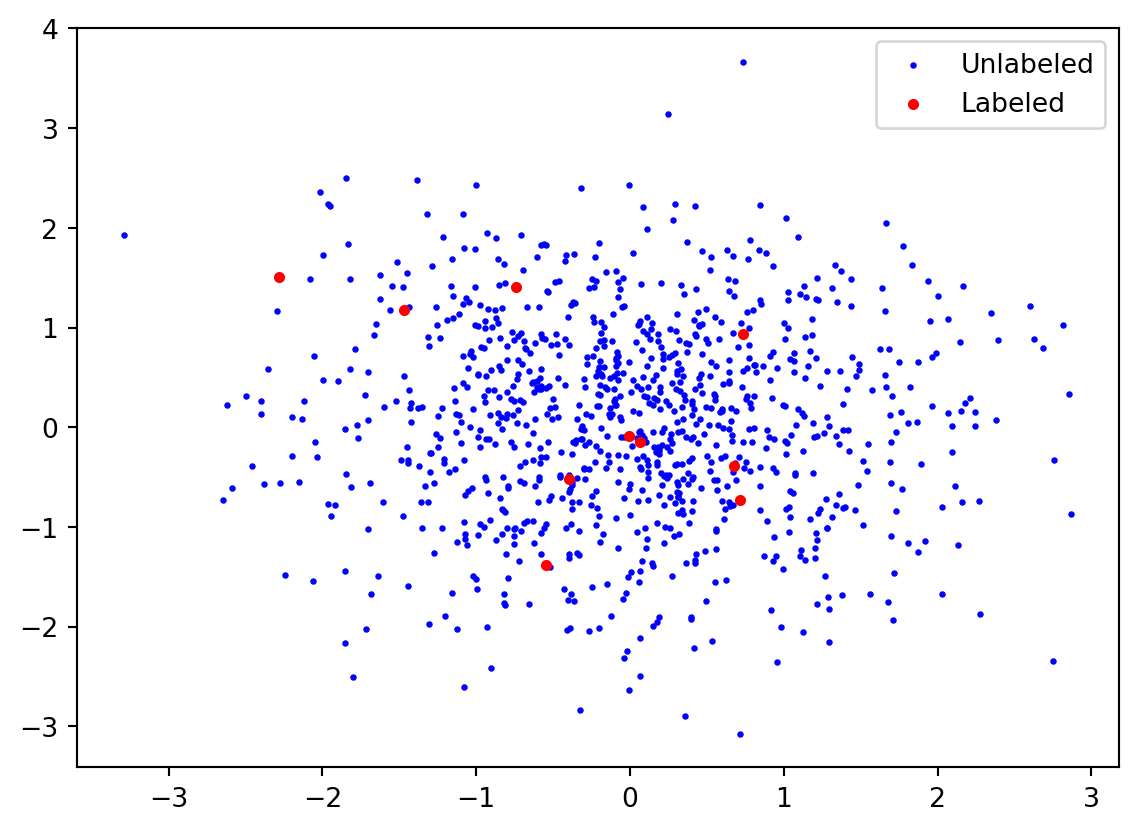

# Plot the data

plt.scatter(X[unlabeled_idx, 0], X[unlabeled_idx, 1], c='blue', label='Unlabeled', s=2)

plt.scatter(X[labeled_idx, 0], X[labeled_idx, 1], c='red', label='Labeled', s=10)

plt.legend()

plt.show()Bayesian Active Learning

Information Theory and Uncertainty Quantification

About Me

Andreas Kirsch

- DPhil (Oxford, 2023) on Active Learning, Information Theory and Uncertainty Quantification (Kirsch 2024)

- Researcher at Midjourney, now DeepMind

- Prior: Research Engineer at DeepMind

Outline Now

- What you’ll learn here and the big picture

- What active learning is (and isn’t) and why it matters

- Course logistics

Empowerment Promise

By the end of this course, you’ll be able to:

- Design AI systems that learn efficiently from minimal labeled data

- Quantify and reason about uncertainty in deep learning models

- Apply information theory to guide optimal data selection

- Implement and scale active learning for real scientific applications

The Big Picture

Key Question

How can we make AI systems learn more efficiently from less data?

Consider training a medical imaging AI system:

- Each labeled example requires expert radiologist time ($200+/hour)

- Need thousands of examples for good performance

Key Insight: Not all examples are equally valuable for learning

What Active Learning Is (And Isn’t)

Active Learning IS:

- Strategic data selection

- Interactive learning

- Uncertainty-guided sampling

Active Learning IS NOT:

- Data augmentation

- Semi-supervised learning

- Transfer learning

Our Journey Together

Foundations (Weeks 1 & 2)

- Active learning fundamentals

- Information theory basics

- Bayesian neural networks and uncertainty

Advanced Methods (Weeks 3)

- Batch acquisition strategies

- Prediction-oriented selection

- Scalable approximations

Course Goals

- Build strong information-theoretic foundations for active learning

- Master uncertainty quantification methods for deep learning

- Understand modern active learning approaches like BatchBALD and EPIG

- Develop practical skills in scaling active learning to large datasets

- Ability to apply active learning to real scientific problems

Prerequisites and Support

Expected Background

- Basic probability theory

- Python programming

- Machine learning fundamentals

Available Support

- Office hours

- Tutors

- Extra reading material

- quizzes before lectures on the previous lecture (ungraded)

- 2 weekly assignments (graded)

- for non-coding questions:

- hand-written solutions to be handed in

- for coding questions:

- hand-written overview to be handed in

- Python code for implementation tasks to be sent in

- for non-coding questions:

- 1 final exam (graded)

Why This Course Matters

Real-World Impact

- Drug discovery: Each experiment costs thousands of dollars

- Protein folding: “One PhD student per protein structure”

- Climate modeling: Limited high-quality historical data

- AI safety: Collecting human feedback is expensive

For Your Future

- Growing demand for efficient ML in scientific domains

- Critical skill for research and industry

- Bridges theory and practical implementation

This Week’s Goals

- Understand why active learning matters

- See how information theory guides data selection

- Implement a simple active learning loop

- Connect theory to practical applications

Success Strategy

- Focus on intuition before formalism

- Engage with assignments and coding exercises

- Connect concepts to your background

- Ask questions early and often

- Participate in discussions

How to Succeed in This Course

Engage Actively

- Complete weekly assignments

- Participate in discussions

- Work through code examples

Build Foundations

- Master information theory basics

- Understand uncertainty types

- Practice implementing methods

Connect Ideas

- Link theory to applications

- Relate to your research interests

- Share insights with classmates

Let’s Begin: A Simple Example

This simple example will grow into sophisticated active learning strategies throughout the course.

Think About

How would you choose which points to label next?

Let’s Begin: A Simple Example

Questions to Ponder

- Why might random sampling be inefficient?

- How do humans choose what data to learn from?

- What makes some examples more informative than others?

Other Questions?

Important

Remember: Your questions help everyone learn!

Preview

Next lectures:

- A deep dive into active learning fundamentals

- A unified framework for information theory

- Your first active learning implementation

Key Message

Active learning isn’t just about saving resources—it’s about making AI systems more efficient and effective learners.

Summary

This overview:

- Established the core problem: learning efficiently from minimal labeled data

- Clarified what active learning is (and isn’t) from a high-level perspective

- Connected theory to real applications in drug discovery, protein folding, and climate modeling

- Set up the learning path through foundations, methods, and applications