TLDR: In Active Learning we use a “human in the loop” approach to data labelling,

reducing the amount of data that needs to be labelled drastically, and

making machine learning applicable when labelling costs would be too high otherwise.

In our paper

What’s Active Learning?

Using deep learning and a large labelled dataset, we are able to obtain excellent performance on a range of important tasks. Often, however, we only have access to a large unlabelled dataset. For example, it is easy to acquire lots of stock photos, but labelling these images is time consuming and expensive. This excludes many applications from benefiting from recent advances in deep learning.

In Active Learning we only ask experts to label the most informative data points instead of labelling the whole dataset upfront. The model is then retrained using these newly acquired data points and all previously labelled data points. This process is repeated until we are happy with the accuracy of our model.

To perform Active Learning, we need to define some measure of informativeness, which is often done in the form of an acquisition function. This measure is called an “acquisition function” because the score it computes determines which data points we want to acquire. We send unlabelled data points which maximise the acquisition function to an expert and ask for labels.

The problem is…

Usually, the informativeness of unlabelled points is assessed individually,

with one popular acquisition function being BALD

Our contribution

In our work, we efficiently expand the notion of acquisition functions to batches (sets) of data points and develop a new acquisition function that takes into account similarities between data points when acquiring a batch. For this, we take the commonly-used BALD acquisition function and extend it to BatchBALD in a grounded way, which we will explain below.

However, knowing how to score batches of points is not sufficient! We still have the challenge of finding the batch with the highest score. The naive solution would be to try all subsets of data points, but that wouldn’t work because there are exponentially many possibilities.

For our acquisition function, we found that it satisfies a very useful property called submodularity which allows us to follow a greedy approach: selecting points one by one, and conditioning each new point on all points previously added to the batch. Using the submodularity property, we can show that this greedy approach finds a subset that is “good enough” (i.e. -approximate).

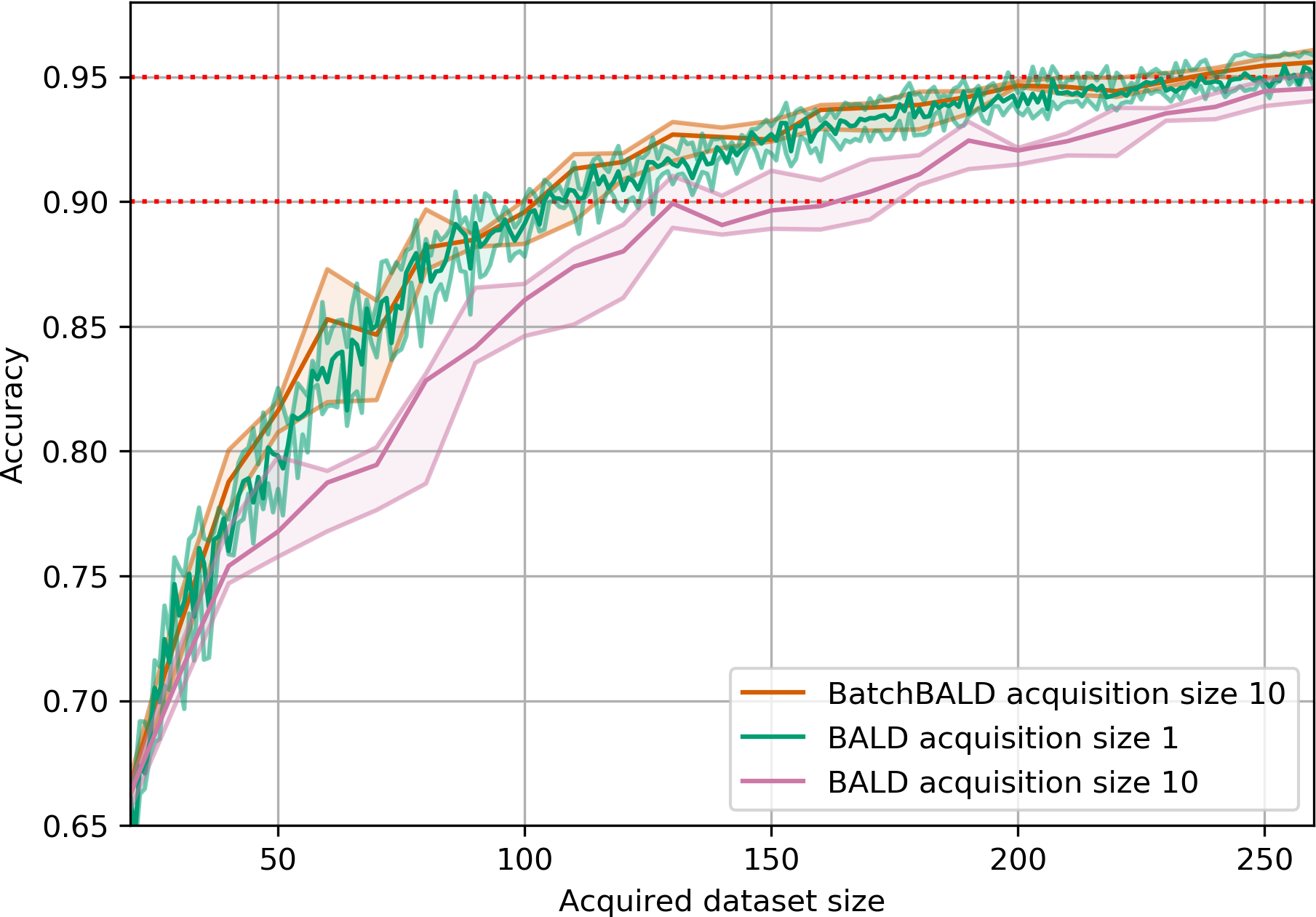

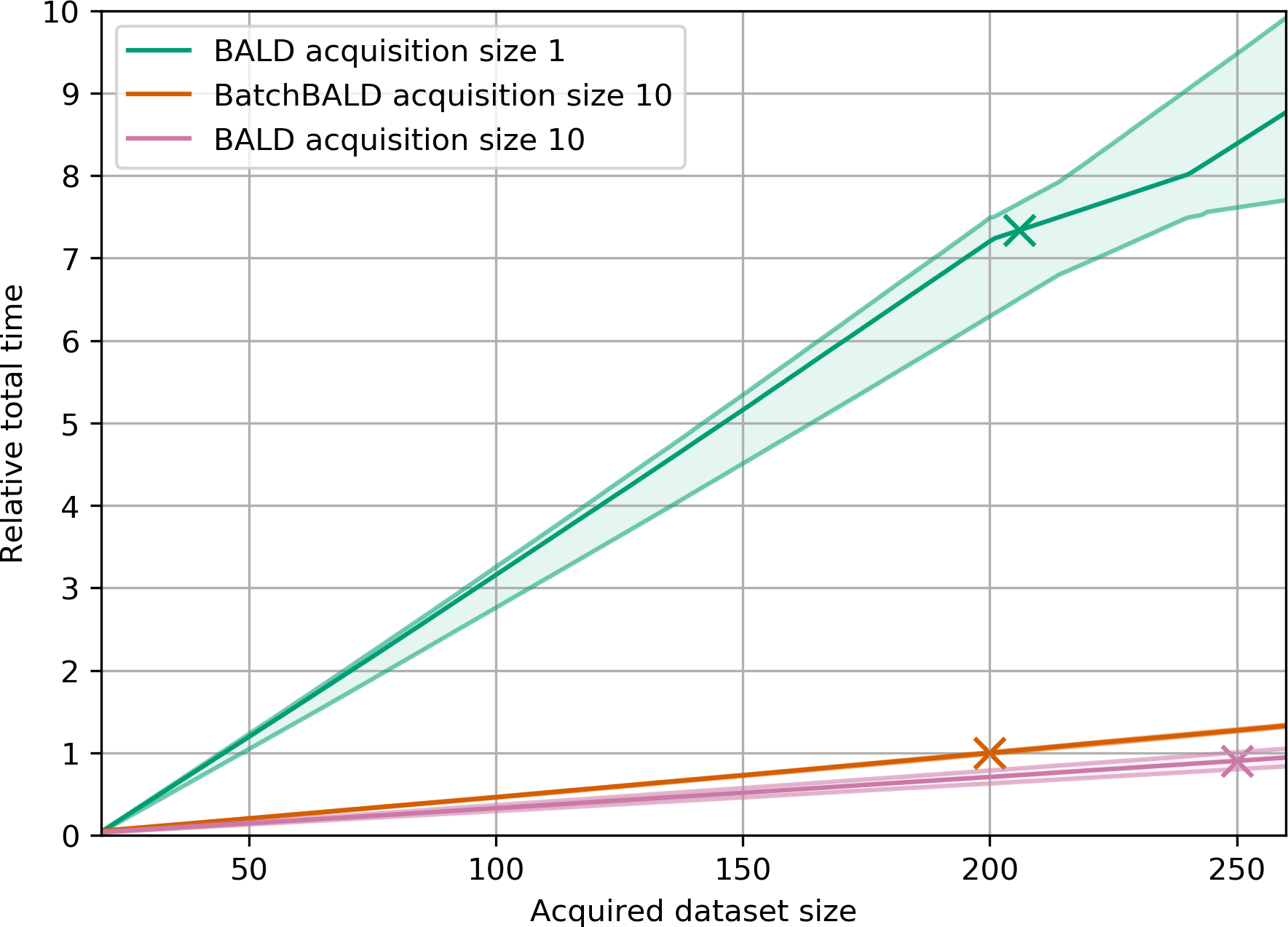

Overall, this leads our acquisition function BatchBALD to outperform BALD: it needs fewer iterations and fewer data points to reach high accuracy for similar batch sizes, significantly reducing redundant model retraining and expert labelling, hence cost and time.

Moreover, it is empirically as good as, but much faster than, the optimal choice of acquiring individual points sequentially, where we retrain the model after every single point acquisition.

Before we explain our acquisition function, however, we need to understand what the BALD acquisition function does.

What’s BALD?

BALD stands for “Bayesian Active Learning by Disagreement”

As the “Bayesian” in the name tells us, this assumes a Bayesian setting which allows us to capture uncertainties in the predictions of our model. In a Bayesian model, the parameters are not just numbers (point estimates) that get updated during training but probability distributions.

This allows the model to quantify its beliefs: a wide distributions for a parameter means that the model is uncertain about its true value, whereas a narrow one quantifies high certainty.

BALD scores a data point based on how well the model’s predictions inform us about the model parameters . For this, it computes the mutual information . Mutual information is well-known in information theory and captures the information overlap between quantities.

When using the BALD acquisition function to select a batch of points, we select the top- points with highest BALD scores, which is standard practice in the field. This is the same as maximising the following batch acquisition function with Intuitively, if we imagine the information content of the predictions given some data points and the model parameters as sets in the batch case, the mutual information can be seen as intersection of these sets, which captures the notion that mutual information measures the information overlap.

In fact, Yeung

BatchBALD

In order to avoid double-counting, we want to compute the quantity , as depicted in figure 6, which corresponds to the mutual information between the joint of the and : Expanding the definition of the mutual information, we obtain the difference between the following two terms: The first term captures the general uncertainty of the model. The second term captures the expected uncertainty for a given draw of the model parameters.

We can see that the score is going to be large when the model has different explanations for the data point that it is confident about individually (yielding a small second term) but the predictions are disagreeing with each other (yielding a large first term), hence the “by Disagreement” in the name.

Submodularity

Now to determine which data points to acquire, we are going to use submodularity.

Nemhauser et al.

The greedy algorithm starts with an empty batch and computes for all unlabelled data points, adds the highest-scoring to and repeats this process until is of acquisition size.

This is explained in more detail in the paper.

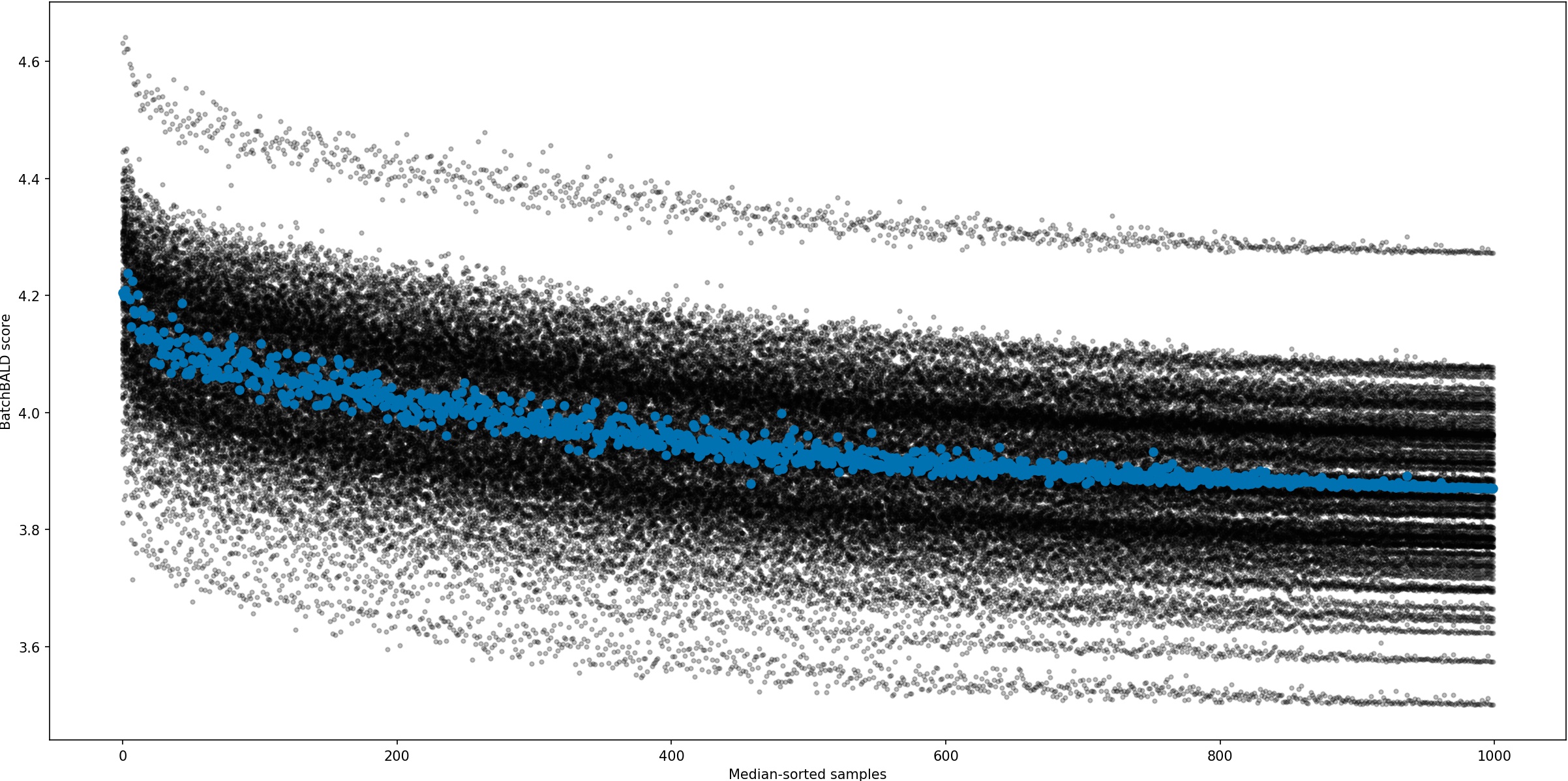

Consistent MC Dropout

We implement Bayesian neural networks using MC dropout

To see why, we have investigated how the scores change with different sets of sampled model parameters being used in MC dropout inference in figure 7.

Without consistent MC dropout, scores would be sampled using different sets of sampled model parameters, losing function correlations between the ’s for near-by ’s, and would essentially be no different than random acquisition given the spread of their scores.

Experiments on MNIST, Repeated MNIST and EMNIST

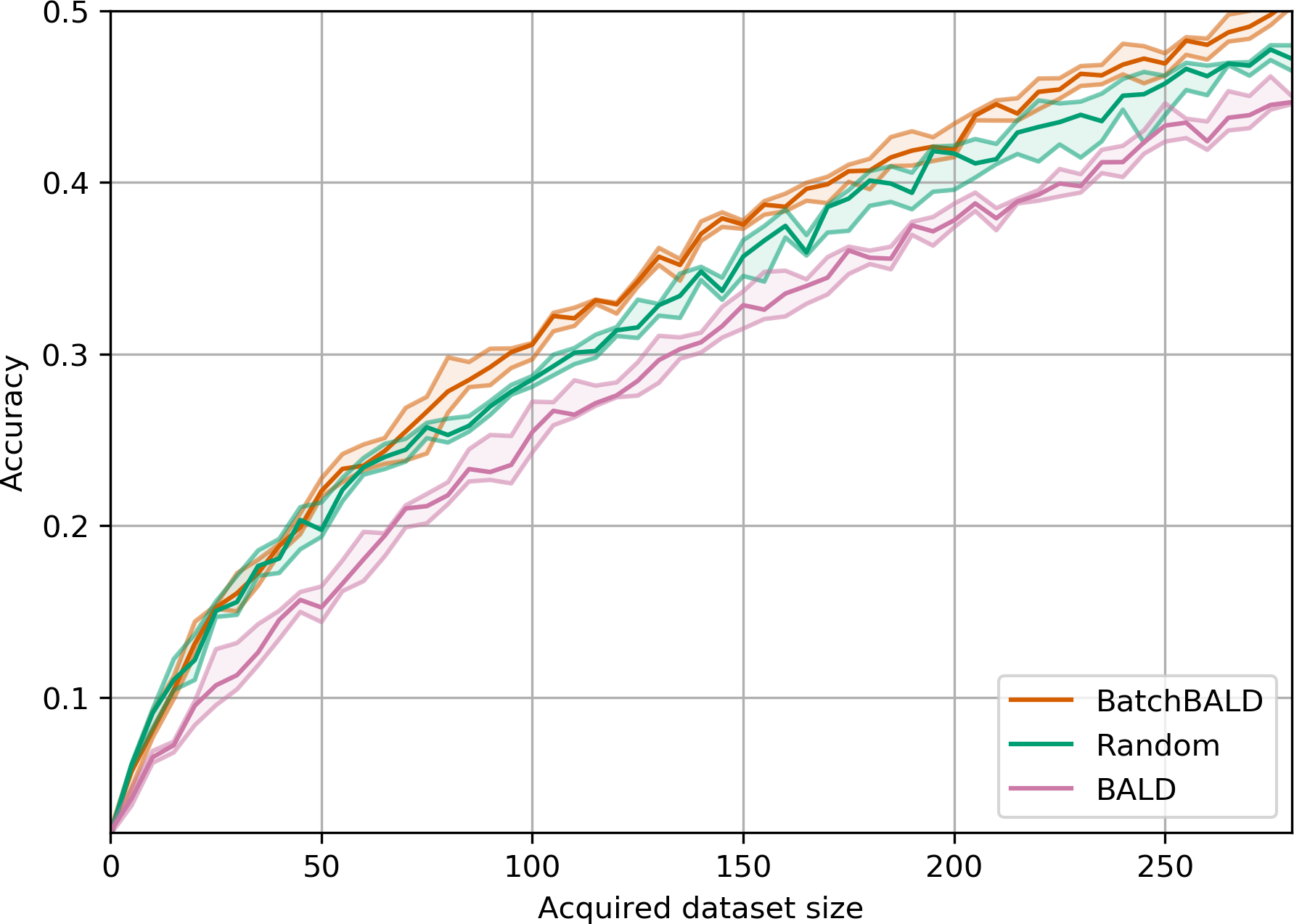

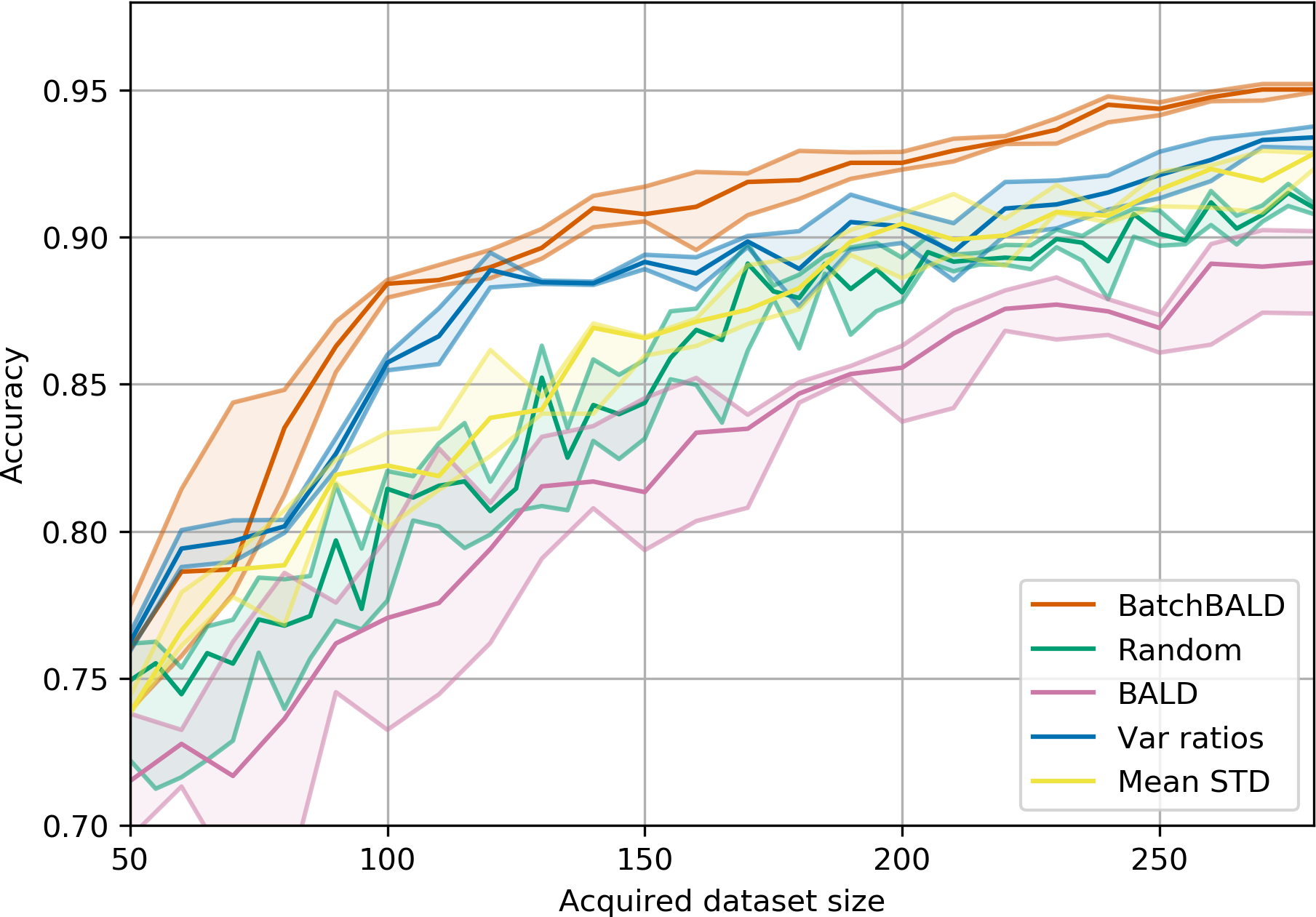

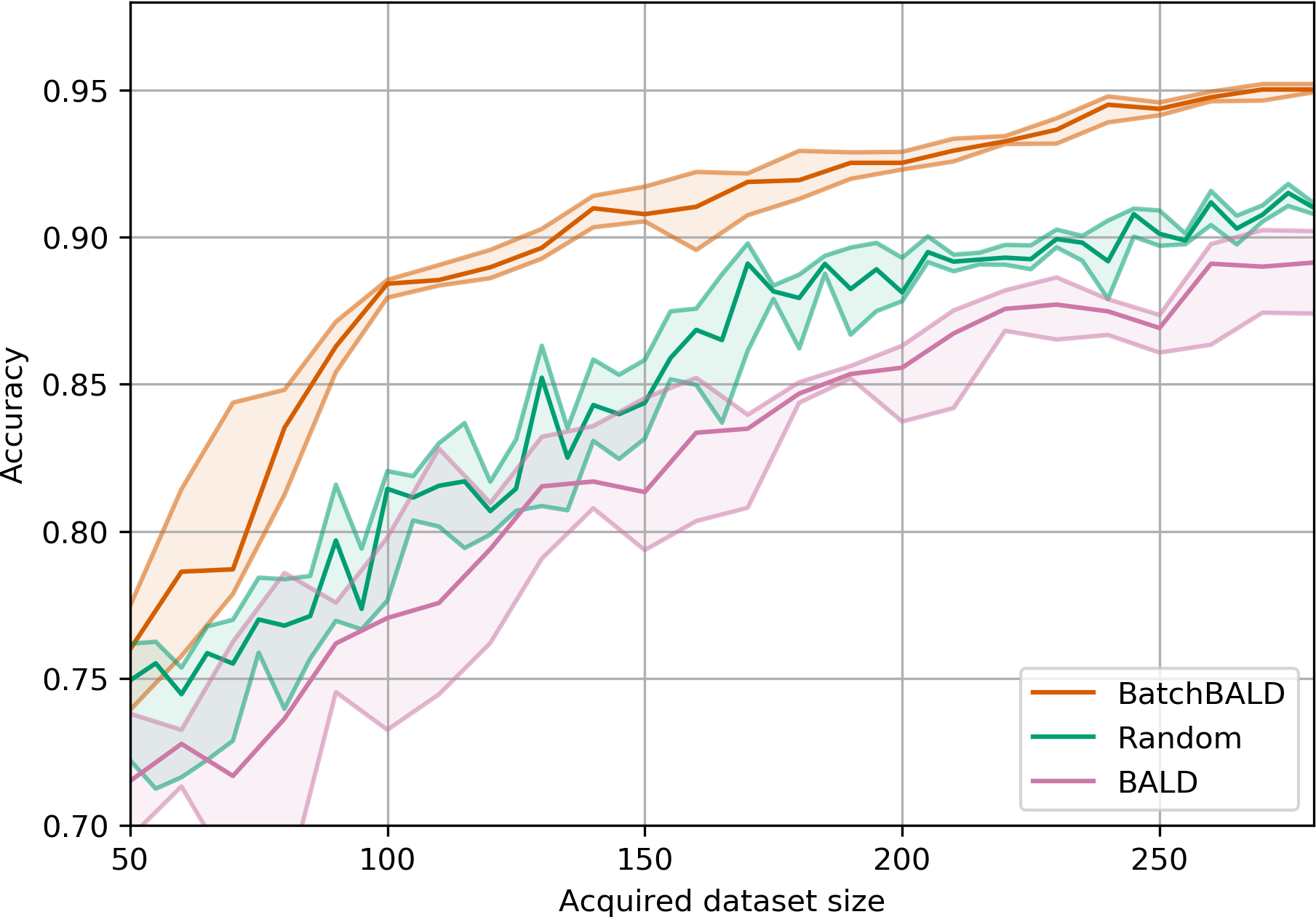

We have run experiments on classifying EMNIST, which is a dataset of handwritten letters and digits consisting of 47 classes and 120000 data points.

We can show improvement over BALD which performs worse (even compared to random acquisition!) when acquiring large batches:

This is because compared to BatchBALD and random, BALD actively selects redundant points. To understand this better, we can look at the acquired class labels and compute the entropy of their distribution. The higher the entropy, the more diverse the acquired labels are:

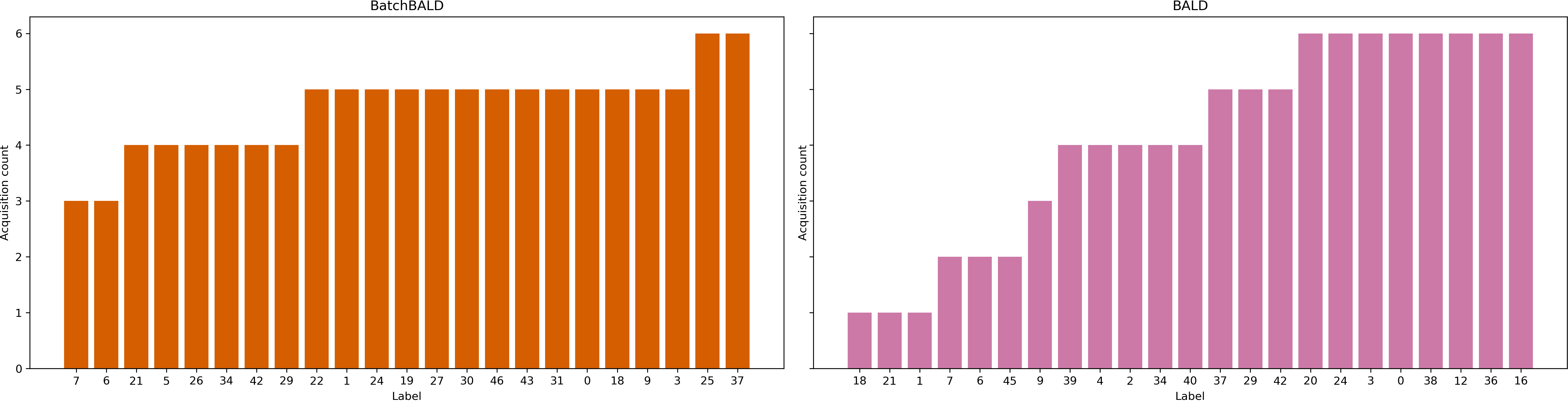

We can also look at the actual distribution of acquired classes

at the end of training, and

see

that BALD undersamples some classes while BatchBALD manages to pick data points from different classes more

uniformly

(without knowing the classes, of course).

Figure 14: Histogram of acquired class labels on EMNIST.

BatchBALD left, random acquisition center, and BALD right. Classes are sorted by number of acquisitions.

Several EMNIST classes are underrepresented in BALD and random acquisition while BatchBALD acquires classes

more uniformly.

The histograms were created from all acquired points.

Figure 14: Histogram of acquired class labels on EMNIST.

BatchBALD left, random acquisition center, and BALD right. Classes are sorted by number of acquisitions.

Several EMNIST classes are underrepresented in BALD and random acquisition while BatchBALD acquires classes

more uniformly.

The histograms were created from all acquired points.

To see how much better BatchBALD copes with pathological cases, we also experimented with a version of MNIST that

we

call Repeated MNIST.

It is simply MNIST repeated 3 time with some added Gaussian noise and shows how BALD falls into a trap where picking the top

individual points is detrimental because there are too many similar points.

Figure 15: Performance on Repeated MNIST.

BALD, BatchBALD, Var Ratios, Mean STD and random acquisition: acquisition size 10 with 10 MC dropout samples.

Figure 15: Performance on Repeated MNIST.

BALD, BatchBALD, Var Ratios, Mean STD and random acquisition: acquisition size 10 with 10 MC dropout samples.

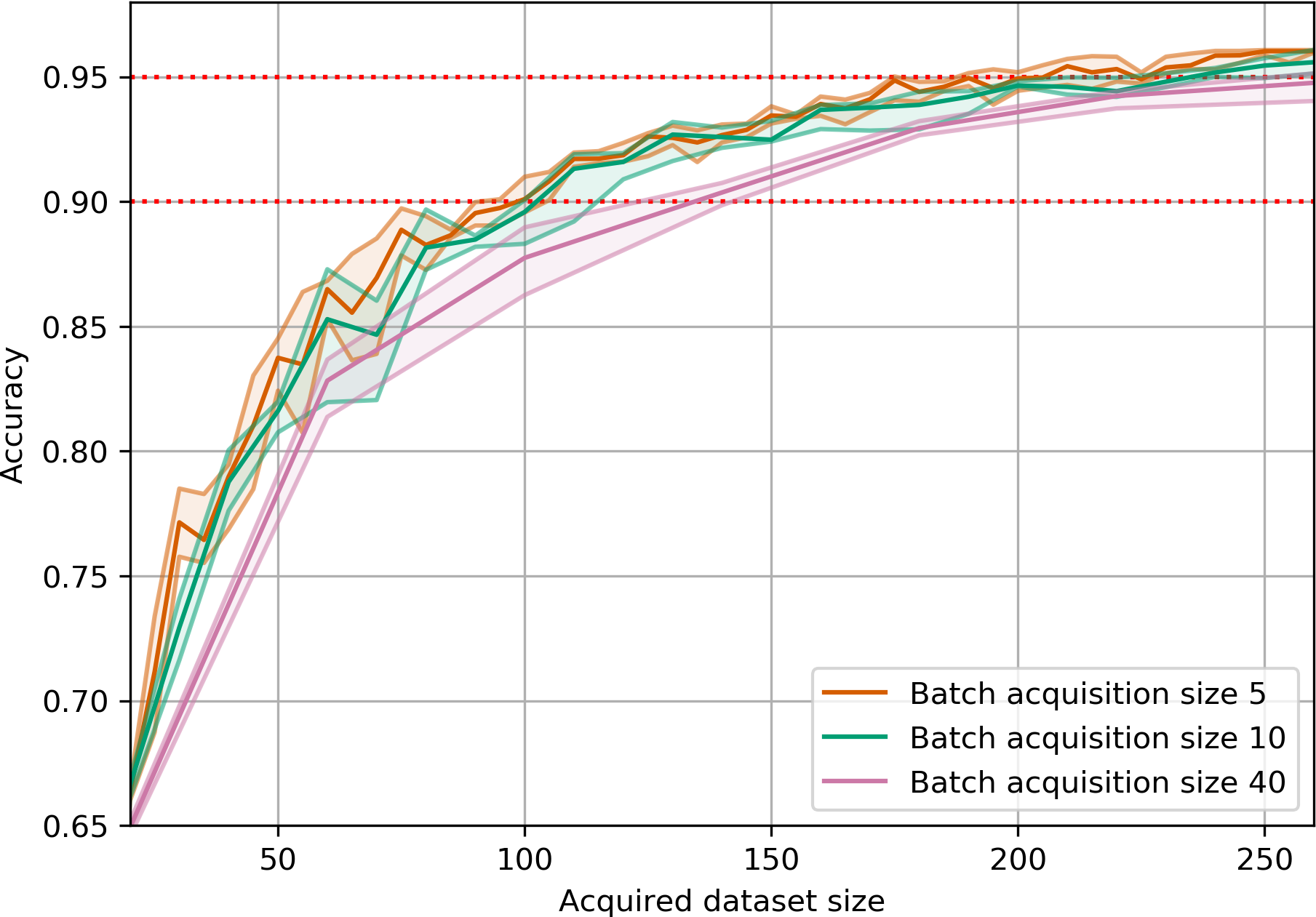

We also played around with different acquisition sizes and found that on MNIST, BatchBALD can even acquire 40 points at a time with little loss of data efficiency while BALD deteriorates quickly.

Final thoughts

We found it quite surprising that a standard acquisition function, used widely in active learning, performed worse even compared to a random baseline, when evaluated on batches of data. We enjoyed digging into the core of the problem, trying to understand why it failed, which led to some new insights about the way we use information theory tools in the field. In many ways, the true lesson here is that when something fails — pause and think.